I am loving all of the incredible new products and tools that have been released lately in the data space. They bring me the same joy as a child on Christmas Day. Microsoft’s Fabric and Copilot, for example, had me skipping around the office for days in giddy excitement, and many late nights have already been spent testing the new functionality. I’ve also built a close bond with my good friend ChatGPT: we discuss all kinds of important topics, and I make sure I always say please and thank you.

Whilst my enthusiasm is evident, I approach this new era with a realistic perspective. These groundbreaking products will not be the right solution for every business. In fact, in certain cases, implementing them may exacerbate existing challenges. That is no reflection on the product or tool; it is simply the reality of the highly individual circumstances most businesses find themselves in. So rather than attempting to define which use cases will or will not benefit from these bleeding-edge tools, I focus below on five key principles that, in my experience, drive data-driven success across companies regardless of their size, maturity, or industry.

According to Patrick Forth, a tech expert at Boston Consulting Group, research shows that around 75% of Australian companies lack digital maturity, which hinders their ability to fully embrace AI. He emphasises that digital maturity is a prerequisite for AI maturity, stating, “You can’t be AI mature unless you’re digitally mature.” (1)

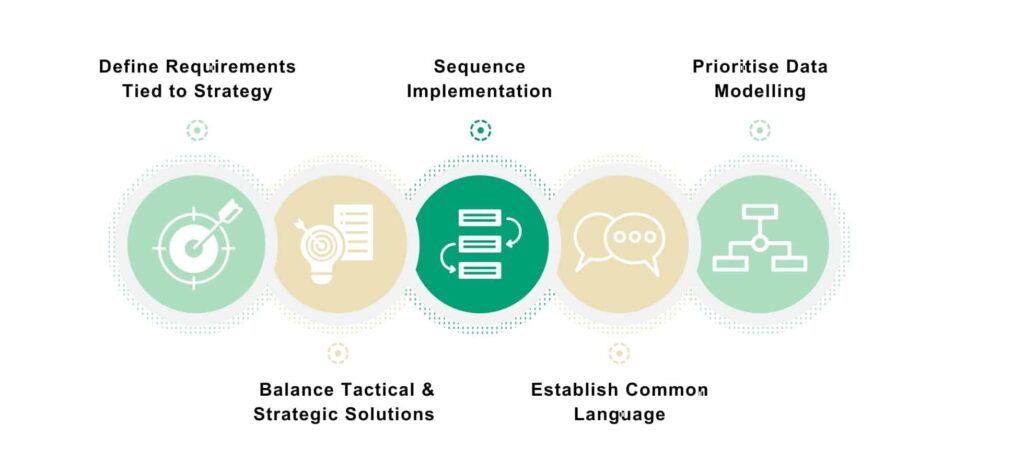

1) Establish well-defined requirements tied to a 2-3 year strategy:

Many companies rush to adopt new products without considering whether they align with their strategic objectives. Merely having a strategy is insufficient: to determine whether a product is suitable, you need to clearly articulate your current and future needs and capabilities. For instance, if budget constraints prohibit hiring highly specialised staff, investing in complex bleeding-edge tools may lead to suboptimal outcomes. Similarly, if your focus is on establishing basic reporting capabilities, there’s no immediate need for AI-enabled solutions. Striking the right balance between your immediate requirements and long-term goals is crucial. Why emphasise a 2-3 year strategy? Technology and business landscapes evolve rapidly. A five-year strategy risks being overtaken by change and can hinder progress, while a one-year plan often fails to yield sustainable outcomes.

2) Understand the trade-offs between tactical and strategic solutions:

Data and analytics initiatives, like any other business endeavour, involve trade-offs. Often, due to time or resourcing constraints, companies need to prioritise tactical deliverables over building optimised, future-proofed data ecosystems. These initial wins are essential for securing stakeholder buy-in and fostering a positive data culture. However, it is crucial to view these tactical deliverables as stepping stones towards a more strategic end goal: well-architected, secure, and optimised data ecosystems. Tactical deliverables can also help identify gaps in data processes that require attention, such as manually created data that is not available in any system. Moreover, they shed light on areas that need automation or modernised integration between internal systems, and addressing these reduces costs and risks.

Tactical outcomes, such as reports built on spreadsheets within Power BI or any other modern BI tool, hold value and should be prioritised, but it is important to plan for their future reconstruction and refactoring as the organisation’s data maturity progresses. Identify the business-critical reports that offer the most value in the short term, document them thoroughly, define key terms, and establish a plan for transitioning them to the future state. Initially granting staff unrestricted access to create their own reports in these new BI tools may seem appealing, but it can lead to a tangled spaghetti mess of conflicting information and manual effort, reminiscent of the spreadsheet challenges many organisations are trying to overcome. Eventually, you may need to roll back some staff’s access and adopt a centralised or hub-and-spoke reporting model to ensure consistency and control, a cultural change that is likely to prove challenging.

3) Avoid digital overload:

In today’s digital landscape, implementing multiple new solutions simultaneously is rarely feasible, especially when also modernising the data ecosystem. Implementing modern data intelligence tools requires a deep understanding of the interconnected nature of data and systems. Rushing into their implementation without a solid foundation can lead to increased complexity and erroneous insights. While these tools may appear user-friendly and offer seemingly accurate results, without proper tagging and testing their outputs may be misleading, and the errors difficult to spot.

There is a very real risk that implementing intelligence tools creates more problems than it resolves, amplifying underlying complexity and producing data ‘results’ that cannot be tested or validated with confidence.

The starting point is to outline a logical and sequential roadmap based on your desired future state architecture. Identify the source of truth for the primary data domain and build the foundation on this. Then sequentially add other systems and data sources based on prioritised business value. A well-planned and staggered implementation approach minimises technical debt, prevents change fatigue among staff, and ultimately reduces project costs while enhancing benefits and adoption.
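The sequencing rule above (primary source of truth first, then other sources in order of business value) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a planning method from this article: the `DataSource` class, the 1-10 `business_value` score and the single-primary-source check are all hypothetical, and a real roadmap would also weigh dependencies, cost and risk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSource:
    """Hypothetical description of a system feeding the data platform."""
    name: str
    business_value: int  # assumed 1-10 priority score agreed with stakeholders
    is_primary_source_of_truth: bool = False


def plan_roadmap(sources: list[DataSource]) -> list[str]:
    """Order onboarding: the primary source of truth first, then the rest by business value."""
    primary = [s for s in sources if s.is_primary_source_of_truth]
    if len(primary) != 1:
        # Assumption: the foundation rests on exactly one agreed source of truth.
        raise ValueError("expected exactly one primary source of truth")
    others = sorted(
        (s for s in sources if not s.is_primary_source_of_truth),
        key=lambda s: s.business_value,
        reverse=True,  # highest business value is onboarded next
    )
    return [s.name for s in primary + others]


if __name__ == "__main__":
    sources = [
        DataSource("Marketing platform", 5),
        DataSource("CRM", 8, is_primary_source_of_truth=True),
        DataSource("Finance system", 9),
        DataSource("HR system", 3),
    ]
    print(plan_roadmap(sources))
    # ['CRM', 'Finance system', 'Marketing platform', 'HR system']
```

Note that the CRM comes first despite a lower score than the finance system: the foundation is laid before value-based prioritisation begins.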

4) Establish a common language early on:

Language plays a vital role in the world of data; some have likened data to the language of an organisation. Implementing remarkable tools and building numerous reports won’t yield substantial results if different business areas, executives, or teams have misaligned definitions and calculations. For example, how does your organisation define a “Client”? Does it include anyone who has ever accessed one or more of your services, even if they haven’t done so in the last two years and you no longer earn revenue from them? Defining terms such as “Growth” is equally critical. Does it refer to an increase in enquiries, or only to successful conversions?

Creating a common language is paramount, while still recognising that departments may have unique perspectives and definitions. You can align key enterprise terms, such as “Client”, and give any department-specific view a different name, say “Active Client”. The key is first to identify the misalignment, then to agree on the different usages, new names, definitions, and the key business owner of each term, before documenting them and ensuring the new terms enter the business’s everyday conversations. Work closely with business leaders to drive this new language throughout the organisation, ensuring it permeates existing reports and internal communication. Starting this journey early paves the way for smoother and faster data uplifts, enabling trustworthy, actionable insights and better decision-making.
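One way to make an agreed language concrete is to record each term, its definition and its owner in one place, and to implement the department-specific variant as a separately named rule. The sketch below assumes one reading of the “Client” example: a “Client” is anyone who has ever used a service, and an “Active Client” is a Client who has used a service in the last two years (approximated as 730 days). The `GlossaryTerm` class, the function names and the owners are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class GlossaryTerm:
    """One agreed business term: its name, plain-English definition and owner."""
    name: str
    definition: str
    owner: str  # accountable business owner (illustrative values below)


GLOSSARY = {
    "Client": GlossaryTerm(
        "Client",
        "Anyone who has ever accessed one or more of our services.",
        "Chief Operating Officer",  # hypothetical owner
    ),
    "Active Client": GlossaryTerm(
        "Active Client",
        "A Client who has accessed a service in the last two years.",
        "Head of Sales",  # hypothetical owner
    ),
}

# Assumption: "the last two years" is approximated as 730 days.
ACTIVE_WINDOW = timedelta(days=730)


def is_client(service_dates: list[date]) -> bool:
    """Enterprise definition: at least one service access, ever."""
    return len(service_dates) > 0


def is_active_client(service_dates: list[date], as_of: date) -> bool:
    """Department-specific definition: a Client whose latest access falls in the window."""
    return is_client(service_dates) and max(service_dates) >= as_of - ACTIVE_WINDOW


if __name__ == "__main__":
    history = [date(2020, 3, 1)]  # last used a service in March 2020
    as_of = date(2023, 6, 16)
    print(is_client(history), is_active_client(history, as_of))  # True False
```

The same person is a “Client” but not an “Active Client”, and because the two terms have different names, reports using either one no longer contradict each other.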

5) Prioritise data modelling:

Last, but by no means least, is data modelling. Once the backbone of any data and analytics solution, data modelling has taken a back seat in recent years due to the ease of deploying tools that bypass upfront modelling. Data lakes, for example, appeared to be a game-changer, offering respite from the arduous task of modelling system data through multiple layers of logic. Many modern tools are so intuitive that it may seem data modelling is no longer necessary. This is a misconception. Data modelling remains crucial for ensuring secure, flexible, extensible, and usable data, and the language surrounding it. Even tools like Fabric and large language models such as ChatGPT rely on well-structured data. Power BI (one of my personal favourites) thrives when based on a star-schema data model. While it is possible to create reports without modelling, doing so creates unnecessary problems, making future changes complex and preventing the platform from reaching its true potential for flexibility and speed of delivery. Without proper data modelling practices, you risk ending up with a plethora of disjointed pipelines, virtualised views, and tables that are challenging to use, analyse, and maintain. Try attaching ChatGPT to raw, unmodelled or badly modelled data: the results will likely be rather unhelpful.
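To make “star schema” concrete, here is a minimal sketch using Python’s built-in `sqlite3` module: one fact table of sales surrounded by client and date dimension tables, queried by joining the facts to the dimensions. The table names, column names and sample rows are hypothetical. In a BI tool such as Power BI, tables shaped like these would typically be related through their key columns rather than queried with hand-written SQL.

```python
import sqlite3


def build_star_schema() -> sqlite3.Connection:
    """Create a tiny in-memory star schema: one fact table, two dimensions."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE dim_client (
            client_key  INTEGER PRIMARY KEY,
            client_name TEXT NOT NULL,
            segment     TEXT NOT NULL
        );
        CREATE TABLE dim_date (
            date_key INTEGER PRIMARY KEY,  -- e.g. 20230115
            year     INTEGER NOT NULL,
            month    INTEGER NOT NULL
        );
        -- The fact table holds measures plus foreign keys to each dimension.
        CREATE TABLE fact_sales (
            client_key INTEGER NOT NULL REFERENCES dim_client (client_key),
            date_key   INTEGER NOT NULL REFERENCES dim_date (date_key),
            revenue    REAL    NOT NULL
        );
        """
    )
    conn.executemany(
        "INSERT INTO dim_client VALUES (?, ?, ?)",
        [(1, "Acme", "Enterprise"), (2, "Bloggs", "SMB")],
    )
    conn.executemany(
        "INSERT INTO dim_date VALUES (?, ?, ?)",
        [(20230115, 2023, 1), (20230220, 2023, 2)],
    )
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, ?)",
        [(1, 20230115, 1000.0), (1, 20230220, 500.0), (2, 20230220, 250.0)],
    )
    return conn


def revenue_by_segment_and_month(conn: sqlite3.Connection) -> list[tuple]:
    """Slice the revenue measure by attributes from two dimensions."""
    return conn.execute(
        """
        SELECT c.segment, d.month, SUM(f.revenue)
        FROM fact_sales AS f
        JOIN dim_client AS c ON f.client_key = c.client_key
        JOIN dim_date   AS d ON f.date_key   = d.date_key
        GROUP BY c.segment, d.month
        ORDER BY c.segment, d.month
        """
    ).fetchall()


if __name__ == "__main__":
    conn = build_star_schema()
    print(revenue_by_segment_and_month(conn))
    # [('Enterprise', 1, 1000.0), ('Enterprise', 2, 500.0), ('SMB', 2, 250.0)]
```

Because every measure lives in one fact table and every descriptive attribute lives in a dimension, a new way of slicing the data (say, by year or client name) needs only a different `GROUP BY`, not a new pipeline.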

The extraordinary technologies now becoming available are transformative in nature, but they do not transform an organisation by default. Whether it’s AI, Fabric, Mesh, Lakehouse, or graph databases, success lies in formulating an implementation roadmap tied to business strategy, understanding the tactical trade-offs, and building a solid foundation that starts with primary sources of truth, strong data modelling, and a common language. By applying these five principles, any organisation can deliver data-driven success, regardless of the specific technologies it chooses to implement.

References

(1) Thomson, J. (2023) Inside the chase for AI’s profit pools, Australian Financial Review. https://www.afr.com/chanticleer/inside-the-asx-chase-for-ai-s-profit-pools-20230616-p5dh4c