For one of my recent projects I was working heavily on AWS integration services to integrate a new SaaS HCM system with legacy finance software. In the process of building the integration patterns focussing on both functional and non-functional requirements, we learned some lessons and found some workarounds when using step functions to orchestrate. The following blog walks through some of these, and provides some good practices you can follow to help avoid critical failures, save time, reduce errors, and overall aid in creating a robust integration on AWS.

1. Use Retries on Lambda Failures:

A robust integration solution will not only effectively connect systems, but also needs to cope with a number of common issues. A few examples of common challenges we encountered in my recent AWS integration project are:

- Temporary database overloading of one of the integrating systems in which the Integration layer (a Lambda) is querying some data.

- Temporary network issues which lead to a “Not able to connect to Database” error, or

- Temporary API issues in one of the systems.

These issues eventually tend to indicate there is a failure in the integration layer, meaning some of the transactions have failed, and will now need to be retried.

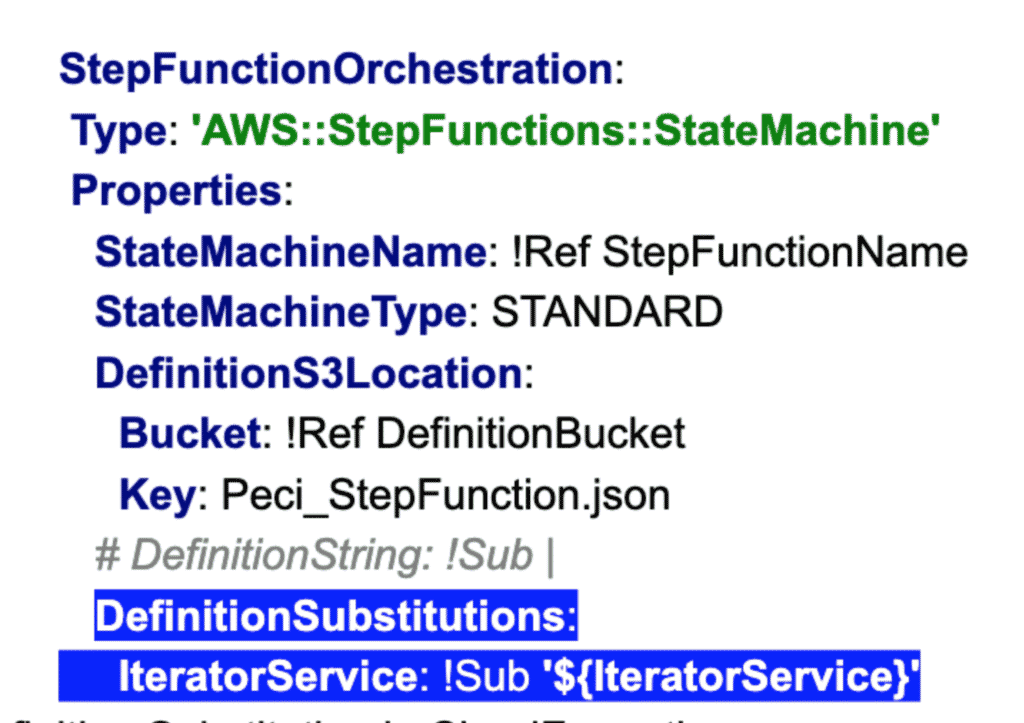

In this scenario, I would recommend that you avoid trying to implement the custom retrying solution by itself, and instead leverage Lambda’s inbuilt retry mechanism (as shown below). This tool can target specific errors and retry gradually for a specified number of times and a specific interval of time, allowing you more flexibility and accuracy, and avoiding unnecessary retries (which cost both time and money).

Figure 1: Retry in AWS Lambda

2. CloudFormation template file size:

From day 1 in the project, the time and effort was invested to keep all the infrastructure as code using AWS CloudFormation script (Infrastructure as a code – IaC) to enable fully automated deployments across multiple environments.

Though it started at just a few hundred lines of code, it ended up taking more than 20,000 lines of CloudFormation script to create AWS resources such as Lambdas (200 +), DynamoDB, S3 Buckets, Step functions and many more.

While trying to deploy the CloudFormation script one day, AWS responded back with an error that the maximum size of the CloudFormation template file was reached i.e we couldn’t create any more resources in the same template.

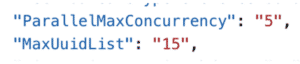

The main issue was with a single step function which was taking up more than 50% of the lines in the file. Breaking down that step function into multiple files wasn’t an easy option as it is a single unit of work, so we opted to use “DefinitionSubstitutions” (as below) which means now the CloudFormation script is broken into two files. One file contains mostly the step function and the other file contains the substitution details such as “IteratorService” in the below example.

Figure 2: Using Definition Substitution in CloudFormation

3. Implement Step Functions Parallel Processing:

If the integration is implementing a business process then there is a good possibility that the AWS step function is used to define the business process. One way to control the throughput/performance of the integration layer is to achieve parallelism in the step function. This is how we achieved it:

- DynamoDB: An entry in the dynamo DB corresponds to a business process inserted by the SaaS system

- LambdaFunction: GetTransaction Lambda function to fetch predefined number of transactions ( config driven) from DynamoDB and trigger the step function in multiple threads based on the configuration.

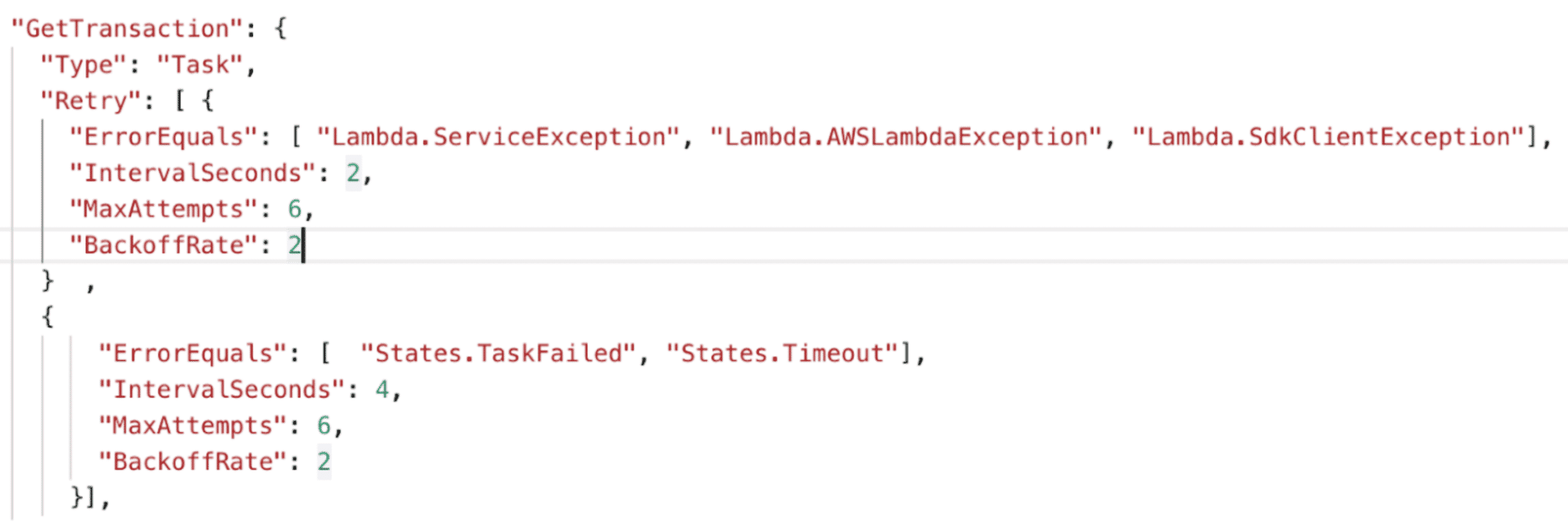

Figure 3: ParallelMaxConcurrency – Number of Threads in Parallel

MaxUuidList – Number of records to be processed in each thread.

This allowed us to control the throughput of the step function depending on the volume of transactions in production and also handle those ad-hoc planned spikes of transactions.

4. Using AWS Alarms/Alerts & Implementing Gates

It’s inevitable that something will go wrong in software, and in integration the likelihood of something going wrong is much more since there are many moving parts. To be proactive and avoid critical issues, you should look to create health checks on the integrating systems and raise alarms in Cloud Watch triggering SNS notifications via Email or SMS when something goes wrong.

On top of this, I would recommend implementing gates (just a boolean check before starting processing a business process ) to prevent data corruption or half commit transactions in the integrating systems. This will help control the transaction flow to the integrating systems in scenarios such as downtime of downstream systems.

A great and simple way to achieve gates is to create a simple table in DynamoDB with rows corresponding to high level business processes and a boolean flag. Before executing a business process (a step function) one of the initial tasks in all business processes (a Lambda) checks if the gate is open or not. If the gate is closed, the business process is marked as paused and retried later after a set interval of time.